Central vestibular tuning arises from patterned convergence of otolith afferents

Liu, Z., Kimura, Y., Higashijima, S. I., Hildebrand, D. G. C., Morgan, J. L. & Bagnall, M. W., Nov 25 2020, In: Neuron. 108, 4, p. 748-762.e4

Abstract

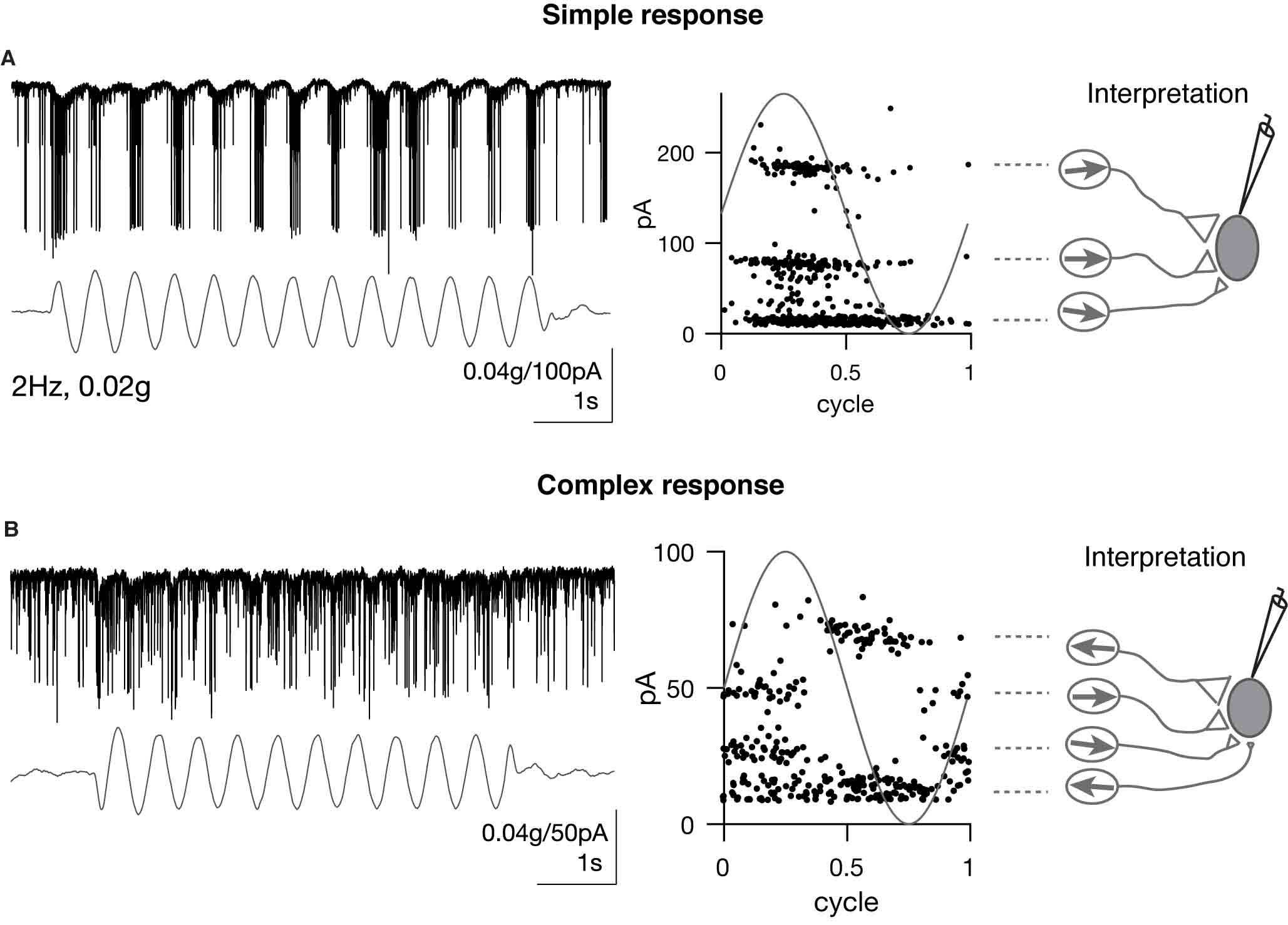

As sensory information moves through the brain, higher-order areas exhibit more complex tuning than lower areas. Though models predict that complexity arises via convergent inputs from neurons with diverse response properties, in most vertebrate systems, convergence has only been inferred rather than tested directly. Here, we measure sensory computations in zebrafish vestibular neurons across multiple axes in vivo. We establish that whole-cell physiological recordings reveal tuning of individual vestibular afferent inputs and their postsynaptic targets. Strong, sparse synaptic inputs can be distinguished by their amplitudes, permitting analysis of afferent convergence in vivo. An independent approach, serial-section electron microscopy, supports the inferred connectivity. We find that afferents with similar or differing preferred directions converge on central vestibular neurons, conferring more simple or complex tuning, respectively. Together, these results provide a direct, quantifiable demonstration of feedforward input convergence in vivo.
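A toy model can make the abstract's final claim concrete: afferents with similar preferred directions sum to a single broad tuning peak, while afferents with differing preferred directions sum to a response with more than one peak. This is a minimal sketch under stated assumptions, not the paper's model. It assumes that each afferent has rectified-cosine directional tuning and that the central neuron linearly sums its inputs. It also uses the number of response peaks as a stand-in for "tuning complexity." The function names, weights, preferred directions and 10° sampling are all hypothetical illustration choices.

```python
import math


def afferent_response(stim_deg, preferred_deg, gain=1.0):
    """Hypothetical afferent: rectified cosine tuning around a preferred direction."""
    return gain * max(0.0, math.cos(math.radians(stim_deg - preferred_deg)))


def central_response(stim_deg, afferents):
    """Hypothetical central neuron: linear sum of (weight, preferred_deg) afferent inputs."""
    return sum(w * afferent_response(stim_deg, pd) for w, pd in afferents)


def count_peaks(responses):
    """Count local maxima in responses sampled around a full circle of directions.

    A sample counts as a peak if it exceeds the previous sample and is at least
    as large as the next one (indices wrap around), so flat zeros never count.
    """
    n = len(responses)
    return sum(
        1
        for i in range(n)
        if responses[i] > responses[i - 1] and responses[i] >= responses[(i + 1) % n]
    )


if __name__ == "__main__":
    directions = range(0, 360, 10)  # stimulus directions sampled every 10 degrees
    # Two afferents with similar preferred directions (0 and 20 degrees).
    similar = [central_response(d, [(1.0, 0.0), (1.0, 20.0)]) for d in directions]
    # Two afferents with opposite preferred directions (0 and 180 degrees).
    differing = [central_response(d, [(1.0, 0.0), (1.0, 180.0)]) for d in directions]
    print(count_peaks(similar))    # → 1: one broad preferred direction
    print(count_peaks(differing))  # → 2: responds to two opposite directions
```

Linear summation is the simplest feedforward assumption. It leaves out inhibition and recurrent excitation, which matches the feedforward picture discussed in the interview but is still only an illustration.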

Department mini interview

The field of neuronal computation has assumed that information complexity emerges along a neuronal circuit through the convergence of simpler inputs. According to this convergence theory, higher-order areas acquire more complex tuning because their activity reflects the combined properties of a diverse set of less complex inputs. The problem is that this view was inherited from computational models and had never actually been measured in biological systems. Is that true, and if so, why?

Martha Bagnall: Exactly right. To build a good model of how a neuronal computation occurs, ideally you would measure many different things: the number of inputs, the synaptic weights, the tuning or activity of the presynaptic population, and the tuning of the postsynaptic population. We can measure all of those things, but only in very different experiments. So we have had to make assumptions about how they fit together in order to build models of complex central tuning, and we haven’t been able to test those assumptions. In our experiments, we were able to measure all of those parameters simultaneously thanks to specific properties of the synaptic connections, which rely on electrical transmission. We could parse out different synaptic inputs by their characteristic amplitudes and, from there, deduce presynaptic tuning as well. As a result, we could put everything together to show that, indeed, feedforward convergent excitation can drive complex central responses.
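The amplitude-based parsing described above can be illustrated with a deliberately simplified sketch. It is not the authors' analysis pipeline. It assumes that each detected synaptic event is recorded as a pair (amplitude, stimulus direction in degrees at the time of the event). It also assumes that inputs from different afferents differ enough in amplitude to be split at a fixed gap threshold (`min_gap`, a hypothetical parameter). Finally, it estimates a putative input's preferred direction as the circular mean of the directions at which its events occurred. The function names and the example events are made up for illustration.

```python
import math


def group_events_by_amplitude(events, min_gap):
    """Split (amplitude, direction_deg) events into putative inputs.

    Events are sorted by amplitude; a new group starts wherever an amplitude
    exceeds the previous one by at least min_gap (a hypothetical threshold).
    """
    groups = []
    for amp, direction in sorted(events, key=lambda e: e[0]):
        if not groups or amp - groups[-1][-1][0] >= min_gap:
            groups.append([(amp, direction)])
        else:
            groups[-1].append((amp, direction))
    return groups


def preferred_direction(group):
    """Circular mean (degrees, 0-360) of the stimulus directions of a group's events."""
    x = sum(math.cos(math.radians(d)) for _, d in group)
    y = sum(math.sin(math.radians(d)) for _, d in group)
    return math.degrees(math.atan2(y, x)) % 360.0


if __name__ == "__main__":
    # Made-up events for illustration only: two amplitude classes.
    events = [(5.0, 90), (12.0, 270), (4.9, 80), (11.8, 260), (5.1, 100), (12.2, 280)]
    for group in group_events_by_amplitude(events, min_gap=2.0):
        mean_amp = sum(a for a, _ in group) / len(group)
        print(round(mean_amp, 1), round(preferred_direction(group)))
```

A fixed gap threshold is the simplest possible split. If the amplitude distributions of different inputs overlapped, a more careful clustering method would be needed.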

Can you explain why the vestibular system of the zebrafish is such an ideal system to take on that challenge?

Zhikai Liu: The biggest advantage of larval zebrafish is that we can work in vivo! Their brains are small and transparent, which allows us to make recordings that had never been attempted in other model organisms. Their vestibular system also becomes functional at a young age, so I can plan an experiment just a few days in advance. Because the cost is minimal, it is really convenient for me to adapt and improvise my strategy.

You found not only that the complexity of tuning in a vestibular neuron does, as a general rule, arise from the diversity of the inputs it receives, but also that this complexity can be predicted from those inputs. How does this enrich existing models?

Zhikai Liu: Great question! The convergence pattern, or input specificity, of central vestibular neurons was almost entirely unknown in previous studies, so the origin of their tuning complexity was still debated. Existing models made many assumptions about central vestibular tuning, such as input identity, tuning, strength and convergence number. In my experiments, I measured these parameters directly. This solidified the feedforward afferent model, in which neither inhibition nor recurrent excitation is needed. One caveat is that the stimulus amplitude and frequency ranges we could apply were limited because of the destructive nature of the vestibular stimulus. It would be interesting to explore whether this model holds true for a wider range of vestibular stimuli.

Your lab is interested in the neural mechanisms of body posture. What do these findings teach us about the neuronal computation that underlies this phenomenon?

Martha Bagnall: First, it’s built from simple components. This makes a lot of sense: posture is vital for survival, so it needs to develop early and robustly. Second, head tilt information is recombined in different ways as soon as it enters the brainstem; the central encoding is more complicated than in the periphery. We think this is because these vestibulospinal neurons project directly to the spinal cord, so the transformation into motor coordinates has to happen rapidly.

In your study, movement was imposed on the fish by an impressive custom-made system that delivers on-demand translational acceleration while you simultaneously record with whole-cell electrophysiology in vivo. Quite an achievement! This allows remarkable control of the motion stimuli, but it is also more akin to passive movement. Do you think your results also apply to self-generated body motion, or could different computational rules apply?

Martha Bagnall: We are certain that the rules change when the fish generates its own body movement. There’s a big mystery in the field right now: we know that the inputs to these neurons, the vestibular afferents, still encode head movement whether it’s expected or unexpected. But the vestibulospinal neurons, and others like them, selectively suppress responses to self-generated head movements. No one is quite sure how this works! From an extensive serial EM database we’re analyzing, it’s clear that the vestibulospinal neurons get a lot of other inputs besides the vestibular afferents, so we think that might be a good place to start looking.